State of the count

As I write, Yes is at 39.27%. This number has been falling gradually as postals are added - on counting night it looked like Yes could go as high as 44, but both the on-the-day/prepoll and on-the-day/postal differences were large. Antony Green tweeted on Monday that day booth votes are 43.8% for Yes, prepolls 35.5% and postals 31.3%. Most postals that will arrive have already been counted. Depending on what happens with prepolls and absents, I'm roughly expecting turnout to be something like 86-87% (down a few points on 2022), but it might still be lower than that range or, I think less likely, higher; I've seen claims from "mid 80s" up to mid-88s.

I could see remaining postals perhaps taking Yes down below 39 if they are disproportionately from places like Flynn (where Yes is running at below 9% in postals) - and Queensland is undercounted at the moment. But even so I would expect absents and provisionals to break to Yes more than dec prepolls break to No so at this stage I'd think that Yes stays in the low 39s.

Yes appears to have won 27 divisions called by ABC, and genuinely leads in five more. In general the Voice has performed very similarly to the 1999 Republic vote in terms of the good (mostly but not all inner city) and bad electorates. The ABC has not yet called Curtin, Mackellar, Maribyrnong, Franklin, Chisholm, Hotham, Reid or Isaacs. (In Reid there was a transposition error in the Concord PPVC, which has been fixed, putting Yes into the lead.) I expect Yes to prevail in Curtin, and No to win Chisholm and Hotham where it already leads. I think Yes will hold on in Maribyrnong and Franklin now and I'd like to see more action in the others though there is a good chance Yes will hold them all. I won't be spending a great deal of time on these because I don't have any and they have no actual consequence. Have had a lot of questions about Bradfield (notably the sole Liberal seat to vote Yes while many Labor seats voted No) but the Yes lead there is too large.

The state pictures on current figures, with my pre-vote aggregated polling estimates in brackets, are below, noting that nationally Yes is running 2% below the two-answer polling average:

NSW 40.3 (43.5)

Vic 45.0 (46.1)

Qld 31.0 (33.7)

WA 36.2 (36.0)

SA 35.3 (39.9)

Tas 40.5 (45.5)

The combined Territories vote is 55.0 (was 49.5 in my abandoned attempt to estimate it from poll remainders).

Aside from Yes slightly underperforming the two-answer average across the board (which is quite normal for a referendum) the South Australian result stands out. The final Newspoll (35.5) nailed it but no other poll was close. The fourth state is currently WA not SA, and WA is running three points below the national average, meaning the double majority burden has ended up at about its average figure after looking in polls like it would be smaller or nothing.
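For anyone wanting to check the comparison, here is a minimal sketch of the arithmetic. The figures are the interim ones quoted above; the variable names are purely illustrative. The "fourth state" is taken to be the fourth-highest Yes vote among the six states, since a double majority needs four of them.

```python
# Interim state Yes percentages and pre-vote aggregate estimates, as quoted above.
results = {"NSW": 40.3, "Vic": 45.0, "Qld": 31.0, "WA": 36.2, "SA": 35.3, "Tas": 40.5}
estimates = {"NSW": 43.5, "Vic": 46.1, "Qld": 33.7, "WA": 36.0, "SA": 39.9, "Tas": 45.5}
national_yes = 39.27  # interim national figure quoted at the top of the article

# Result minus estimate: a negative value means Yes underperformed the aggregate.
differences = {s: round(results[s] - estimates[s], 1) for s in results}

# A double majority needs four of six states, so the fourth-best state is the tipping state.
ranked = sorted(results, key=results.get, reverse=True)
fourth_state = ranked[3]
state_gap = round(national_yes - results[fourth_state], 2)

for state in ranked:
    print(f"{state}: {results[state]:.1f} ({differences[state]:+.1f} vs estimate)")
print(f"Fourth state: {fourth_state}, {state_gap:.2f} points below the national vote")
```

These are interim numbers, so the differences will move a little as the count finishes.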

There was a divergence of polling samples in Tasmania and I used a long-term average for Tasmania because I did not think the small Morgan and Resolve subsamples were reliable, having seen small Tasmanian subsamples in national polling fail many times before. Polling by YouGov/Newspoll continually had Tasmania near the national average, including in the final Newspoll (40.9), but I had to give the others some weight! The claim was Tasmania would vote Yes because of bipartisan state support. In fact the Liberal support for the Voice was mostly just the Premier, with all Senators and at least one state Minister opposing it, not to mention two defectors whose flight to the crossbench has damaged the government's authority anyway. While supported by some prominent local elders such as Rodney Dillon and Patsy Cameron, the Voice was more prominently opposed by the Tasmanian Aboriginal Centre including high-profile veteran activist Michael Mansell, and by the Circular Head Aboriginal Corporation.

The gravity of No

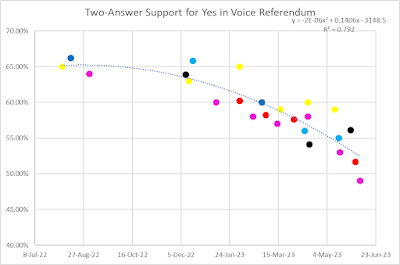

I started following the Voice polls at the end of last year. I'd been meaning to write something about it on here earlier but it took me into April to find the time and inspiration amid a difficult start to 2023. Just after Peter Dutton announced the Coalition's formal opposition I wrote this (which I believe to be the first pseph article that tracked the Voice support) and I made this baby Voice two-answer graph:

I found it interesting that the fall seemed to be accelerating, given I had seen Peter Brent's pieces from January (linked in the article) that suggested that the Voice was doomed. I felt that putting a curved trendline on it was a bit out on a limb (it's only 16 data points) but I put it out there like that anyway.

At this stage it still seemed possible the decline would pull up but as it accelerated it became clear Yes was in a lot of trouble. By the time of the second article, Yes was at 54%.

And on it went as this amazing carnival of polls brought more and more information, but still there was never any point at which the decline actually stopped (towards the end it slowed, a bit, though only after applying large corrections for apparent house effects). And finally I ended up with this, where the Yes vote looks like what happens if you throw a rock out a fourth-storey window horizontally. Trying to save the Voice was like fighting electoral gravity.

Green - Newspoll, Dark Green - YouGov, Magenta - Resolve, Grey - Essential, Dark blue - JWS, Light blue - Freshwater, Black - Morgan, Red - Redbridge, Orange - DemosAU, Purple - Focaldata, Red star - final result (interim, position may change slightly)

While I did use fancier modelling for the close-up tracking, for the long-term tracking a simple quadratic decline worked just fine all the way from the election to the end. There was never any clear inflection point. There was never any evidence (perhaps unlike with the Republic) that anything that happened caused a shift. A future historian, asked from the graph alone to pick the point at which the Liberal Party announced its admittedly pre-telegraphed opposition, would have no chance. And here we are with the Voice referendum defeated - it may seem like an amazing slope and margin, but it's just a bog-standard mid-term Labor referendum loss historically. Even Curtin couldn't win one against the opposition he had scattered to the winds the year before. I believe a reason for the trend is that much opposition to the Voice spread organically. The No side were (apparently) out-resourced, out-corfluted and out-staffed but it didn't matter.
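To show what a "simple quadratic decline" means in practice, here is a rough sketch of a least-squares quadratic trendline in plain Python. It is not the actual tracking model used for the graphs, and the data points are synthetic placeholders, not real poll readings. The function name `fit_quadratic` and the time axis are assumptions made for illustration.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x**2 via the normal equations."""
    # Power sums S[k] = sum(x**k) and right-hand sides T[k] = sum(y * x**k).
    S = [sum(x ** k for x in xs) for k in range(5)]
    T = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented 3x3 system of normal equations.
    M = [[S[i + j] for j in range(3)] + [T[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(3):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [v - factor * p for v, p in zip(M[r], M[col])]
    return tuple(M[i][3] / M[i][i] for i in range(3))


# SYNTHETIC placeholder series (not real polls): an accelerating fall over time.
months = list(range(11))
yes_share = [60 - 0.1 * m * m for m in months]

a, b, c = fit_quadratic(months, yes_share)
print(f"trend: {a:.2f} + {b:.2f}*t + {c:.2f}*t^2")
```

A negative coefficient on the squared term is what gives the "rock out of a window" shape: the fall gets steeper over time instead of levelling off.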

Indigenous Support Levels

The matter of Indigenous support levels for the Voice was always going to be much scrutinised after the event but what we have seen is a tryhard psephology bonanza from both sides. One of the problems with electoral data is that people are forever trying to use it for things it can't be used for, because voters from different demographics vote together in the same booths. Understanding the data means being upfront about its limitations.

The No supporters in the debate have generally pointed to high No votes in many seats with high Indigenous populations, but this is classic ecological fallacy garbage. No Australian division is majority Indigenous. The divisions with the most Indigenous voters are mostly rural/outback seats where voters would vote No whether there were Indigenous people living there or not. Perhaps they vote No even more because of perceived social conflicts - see comments at the bottom here. There aren't enough Indigenous voters in these seats for even a high Yes Indigenous vote to cancel out a white majority that's voting 20-25% Yes.
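The arithmetic behind that last point can be sketched as a simple two-group mixture. The 20-25% Yes rate for the non-Indigenous majority comes from the paragraph above. The 80% Indigenous Yes rate and the 10% Indigenous share are hypothetical inputs chosen only for illustration, not estimates, and the function names are made up for this sketch.

```python
def overall_yes(indigenous_share, indigenous_yes, other_yes):
    """Seat-wide Yes rate as a weighted mix of the two groups' Yes rates."""
    return indigenous_share * indigenous_yes + (1 - indigenous_share) * other_yes


def share_needed_for_majority(indigenous_yes, other_yes):
    """Indigenous share of voters needed for the seat-wide Yes rate to reach 50%."""
    return (0.5 - other_yes) / (indigenous_yes - other_yes)


# Hypothetical seat: 10% Indigenous voters at 80% Yes, everyone else at 25% Yes.
print(f"{overall_yes(0.10, 0.80, 0.25):.1%}")

# Share needed to reach 50% at each end of the 20-25% range for other voters.
for other in (0.20, 0.25):
    print(f"{other:.0%} -> {share_needed_for_majority(0.80, other):.1%}")
```

Under these assumed inputs the seat would need to be somewhere around 45-50% Indigenous before it tipped to Yes. That is why a No result at division level says little about how Indigenous voters in it voted.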

Another false No claim involves a correlation between high Indigenous voter percentages and low Yes votes at booth level. Aside from this being unsound for the reason stated above, it is also unsound because it breaks down when the Indigenous voter percentage is very high (see graphs below).

Several Yes supporters have tried to use high Yes votes in majority Indigenous booths in defence of the Yes campaign's farcical decision to highlight and cite a tiny Ipsos sample from January on corflutes claiming 80% support for the Voice (see here for an assessment of that poll). Why the Yes campaign used Ipsos and not even the March YouGov subsample (which was much larger, slightly less cobweb-encrusted and had a better result) will never be known beyond that Yes23 never saw an error too bad for it to make. They left themselves wide open to Indigenous subsamples from other pollsters coming out with lower readings before voting day and that is exactly what occurred.

The biggest problem with the pitiful apologism for these corflutes is that remote Indigenous voters are only one-sixth of all Indigenous voters, and an especially disadvantaged sixth; they could well be more strongly Yes than rural town or urban Indigenous voters, and the late Resolve and Focaldata subsamples give some support to that view. The other problem is that even in those booths, getting the Indigenous vote above 80 across the board is tough sledding. It's 63% in booths that are more than 50% self-identified Indigenous by census data, and 71% in the top 25 booths, which are all above 80% self-identified. In the latter booths, there's not a significant correlation between First Nations percentage and Yes.

Simon Jackman has put up a great graphing tool where one can look at the pattern between booths by various statistics. Here's the pattern for Queensland by Indigenous status (it's similar in NT and WA with many near-100% Indigenous booths, but not in NSW, where there are some booths with quite high Indigenous percentages but low Yes votes).

The Yes vote is high in low-Indigenous suburban booths, bottoms out at about 8% Indigenous, and then increases as the share of Indigenous voters ramps up and starts to counteract non-Indigenous No voters, to about 75% at effectively all-Indigenous. It may not be only them driving it; it's possible that in very Indigenous booths the few non-Indigenous voters would also be very pro-Yes.

The other problem with "80% support" and related claims is that whatever the Yes vote, it's only the support rate among those voters who voted, and turnout in remote areas is often low.

Poll Denier Bait And Switch

Poll denial has largely gone to ground on social media because those guilty of it are too gutless to admit their error and encourage their followers to not repeat their mistake. But a small number have done the bait and switch that I could set my watch by on Saturday night - from "The polls are wrong! Yes will win easily!" to "The polls were right because they caused this! Ban polls!"

The main theory being advanced by the poll-deniers-turned-poll-banners is bandwagon effect: that voters switched to No to be on the side that's popular. But this makes no sense since initially Yes was winning, by a lot, with No a relatively fringe position, seldom reaching 20 with undecideds in before last August. Furthermore the same pattern of decline has been seen in many past referendums even when polling was very sparse and there was not a constant picture of momentum, most notably the 1951 Communist ban referendum (a rich source of parallels to this one generally). There's no reason to advance a theory about polls causing rather than measuring the Yes decline when it can be explained simply by the effect of increasing debate about a novel proposal, organic spread of social license to vote No and the ease with which No campaigns tap into fears and cautiousness.

At this vote, poll and psephology denial, or at least irrational optimism in the face of obvious patterns, was a systematic failure of the Yes campaign from the PM and Noel Pearson down - not just a few randoms on Twitter. The view that declining polls were irrelevant and historic psephology could be ignored blighted the Yes approach from the start. Instead of encouraging the campaign to pay heed and switch tack, Yes supporters online (probably thousands of them) then tried to shoot the messengers, attacked polls and people tweeting polling stats and made themselves and their cause look stupid in the process. This should never happen again. People on the left who want to be an antidote to Trump and "post-truth politics" must realise that with that comes a responsibility to be more interested in the facts than in repeating unchecked junk from some fellow traveller on Twitter.

As for calls for poll blackouts, I have mentioned before that in Chile's somewhat similar referendum last year, in the absence of polls in the final weeks some newspapers didn't just switch to pure issue reporting but instead reported social media analytics studies that found Yes was winning. (No won by a point more than it did here.) One of the advantages of robust polling in a case like this is it prepares those willing to pay attention for what might otherwise be an even more traumatic result. Even had there been a poll blackout, by the time it would have kicked in it was clearly all over.

And no, truth in advertising laws would not have made a jot of difference. Such laws can target only claims that are materially false, not speculative scare campaigns about what might happen or slogans like "it divides us". These calls come from people who pretend the Yes campaign that (among other things) often passed off the Voice as merely "recognition", imitated AEC signage in an echo of the Chisholm Liberal deception and used 10-month old and extremely flimsy polling was some kind of paragon of honesty, integrity and reason. If such laws had existed, Yes would have tripped over them too, while the No campaign would have exploited every breach it committed to claim that officials were biased against it.

I had a possible final section of this article - a verdict on the many problems of the Yes campaign and referendum concept - but it is getting rather long, and thinking about what to put in it is holding up release of this one for too long. So I've decided to put this out as it is; there may be a second part in coming days.

Updates on the count progress may be added at the top.

I am a "referendum poll denier". If a poll sample is random, and people answer honestly, then polls will be accurate. For repeating events, like elections, the honesty of those polled, and the randomness of the participants with respect to their voting intentions, can be established over time. For a one-off event it is not as clear to me that this is as well established, so I have more uncertainty. Maybe pollsters have more basis for certainty. However, the Brexit result gave me pause. I believe the polls were less accurate with Brexit than for a typical British election. Is this true?

Most of those objections are dealt with in C10 here https://kevinbonham.blogspot.com/2023/09/australian-polling-denial-and.html There is the issue that referendum and ballot measure polls frequently underestimate the No option, but it is not clear that applies to online polling.

The Brexit poll miss was slightly worse than the average miss for UK elections long-term, but about the same as the error for the two surrounding elections. It got the attention it did only because it had the winner wrong - it predicted Remain winning by a whisker and instead Leave won narrowly.

So many bait-and-switch moments. A common one from the more vocal social media No conspiracists was to look at poll results showing No would win, say something vague and provocative like "I wonder what the Deep State will do to rig the votes now?" and then when the polls turn out to have been pretty accurate, and No comfortably wins, it changes almost immediately to "I guess they couldn't fake enough votes to get Yes over the line."

So instead of Yes going from 'Polls are wrong' to 'Polls are right but causative' those No supporters go from 'Polls are right' to 'Polls must have been rigged since they accurately predicted a rigged outcome'.

Yes I saw quite a bit of this one too.

Was really hoping for the second part of this article.

May still appear but probably not as quickly; insane electoral reform developments in Tasmania became a higher priority and then I went away on fieldwork. I might even turn it into a bookender for when the count is finished.
